About

Welcome to my website! I'm Jan Jaap van Assen, and here I share an overview of my scientific work and other projects. I’m Dutch and currently work as Lead Data Scientist at the Netherlands Labour Authority. The Labour Authority is also part of an ICAI (Innovation Center for Artificial Intelligence) lab called AI4Oversight, where I’m a track supervisor and mentor PhD researchers.

Besides this, I’m affiliated with the Perceptual Intelligence Lab at Delft University of Technology as an independent researcher. My research background is in human-computer interaction, visual perception, and computational neuroscience. I use various machine learning techniques to create models of how our visual system works. I’m interested in understanding how we visually estimate properties of objects and stuff around us.

Just so you know, I built this site to be totally cookie-free. No trackers, no APIs, not even a digital crumb. Pretty rare these days, online privacy! I’ve also added a few visuals I made along the way. Some are photos from my old film cameras, and some are digital renderings.

Connect

If you have any questions or would like to get in touch, feel free to contact me at mail [at] janjaap [dot] info. You can also find me on the following platforms:

Publications

Journal Papers

2025 Mitchell J. P. van Zuijlen, Yung-Hao Yang, Jan Jaap R. van Assen, & Shin'ya Nishida. Optical material properties affect detection of deformation of non-rigid rotating objects, but only slightly. Journal of Vision, 25(6), 6-6. doi:10.1167/jov.25.6.6. [PDF]

2020 Jan Jaap R. van Assen, Shin'ya Nishida, & Roland W. Fleming. Visual perception of liquids: Insights from deep neural networks. PLoS Computational Biology 16(8): e1008018. doi:10.1371/journal.pcbi.1008018. [PDF]

2018 Jan Jaap R. van Assen, Pascal Barla, & Roland W. Fleming. Visual features in the perception of liquids. Current Biology, 28(3), 452-458, doi:10.1016/j.cub.2017.12.037. [PDF]

2017 Filipp Schmidt, Vivian C. Paulun, Jan Jaap R. van Assen, & Roland W. Fleming. Inferring the stiffness of unfamiliar objects from optical, shape, and motion cues. Journal of Vision, 17(3), 18-18, doi:10.1167/17.3.18. [PDF]

2017 Vivian C. Paulun, Filipp Schmidt, Jan Jaap R. van Assen, & Roland W. Fleming. Shape, motion, and optical cues to stiffness of elastic objects. Journal of Vision, 17(1), 20-20, doi:10.1167/17.1.20. [PDF]

2016 Jan Jaap R. van Assen, & Roland W. Fleming. Influence of optical material properties on the perception of liquids. Journal of Vision, 16(15), 12-12, doi:10.1167/16.15.12. [PDF]

2016 Jan Jaap R. van Assen, Maarten W. A. Wijntjes, & Sylvia C. Pont. Highlight shapes and perception of gloss for real and photographed objects. Journal of Vision, 16(6):6, 1–14, doi:10.1167/16.6.6. [PDF]

For a complete CV or list of publications, please contact me at mail [at] janjaap [dot] info or via LinkedIn.

Collective Flow

In 2020, I received a Marie Curie Individual Fellowship for the project "Our Elemental Sense of Collective Flow." In September 2020, I began this project at the Perceptual Intelligence Lab at Delft University of Technology.

What is it?

Collective flow consists of individual entities or agents that exhibit both collective and individual behaviours by following a coordinated set of rules. These include inanimate occurrences (e.g., shaken metallic rods, nematic fluids), microscopic instances (e.g., macromolecules, cells, bacterial colonies), and more complex manifestations involving intelligent organisms (e.g., insect swarms, bird flocks, humans).

It’s impressive how we can perceive a wide range of behaviours from even very abstract motion patterns. We can identify behaviours such as leadership, discipline, and agitation from basic collective motion. I’m also very interested in future trajectory prediction of collective flow. I built a simulator to test this in online experiments.
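To make the idea of agents following a shared set of rules concrete, here is a minimal sketch of one update step in a generic alignment model. It is an illustration only and not the simulator used in the experiments: the function name `step` and the parameters `speed`, `radius`, and `max_turn` are assumptions chosen for this example. Each agent steers toward the average heading of its neighbours, limited by a maximum turning rate, and then moves forward.

```python
import math

def step(positions, headings, speed=1.0, radius=5.0, max_turn=0.1):
    """Advance every agent by one time step.

    positions: list of (x, y) tuples; headings: list of angles in radians.
    All parameter names and defaults are hypothetical.
    Returns (new_positions, new_headings).
    """
    new_headings = []
    for (x, y), h in zip(positions, headings):
        # Average heading of all agents within `radius` (including the agent
        # itself), summed as unit vectors so that angles wrap correctly.
        sx = sy = 0.0
        for (ox, oy), oh in zip(positions, headings):
            if math.hypot(ox - x, oy - y) <= radius:
                sx += math.cos(oh)
                sy += math.sin(oh)
        target = math.atan2(sy, sx)
        # Signed smallest angle from the current heading to the target.
        delta = math.atan2(math.sin(target - h), math.cos(target - h))
        # Limit the turn to at most `max_turn` radians per step.
        delta = max(-max_turn, min(max_turn, delta))
        new_headings.append(h + delta)
    # Move each agent forward along its updated heading.
    new_positions = [
        (x + speed * math.cos(h), y + speed * math.sin(h))
        for (x, y), h in zip(positions, new_headings)
    ]
    return new_positions, new_headings
```

In a sketch like this, a small `max_turn` makes agents align slowly and keeps the group loose, while a large value lets them snap to the local average heading.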

The Research

We developed a variety of online experiments involving tasks such as rating, naming, similarity judgements, and ordering. Some experiments allowed real-time adjustments to the simulations.

We found that while specific behaviours are recognisable, one dominant perceptual dimension relates to the agents' turning rate, which strongly influences flock grouping and uniformity. Grouping and uniformity can be measured directly by the average inter-agent distance and orientation difference, and these two metrics explain over 90% of the main perceptual dimension. This raises the question: how do we perceive more subtle nuances, such as emotional states, which are harder to isolate from the dominant grouping/uniformity effects?
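As a rough illustration of the two metrics, the sketch below computes the average inter-agent distance and the average orientation difference over all pairs of agents in a single frame. This is one plausible reading of those measures, not the analysis code from the study; the function name `flock_metrics` and the input layout are assumptions.

```python
import math
from itertools import combinations

def flock_metrics(positions, headings):
    """Return (mean pairwise distance, mean absolute heading difference).

    positions: list of (x, y) tuples; headings: list of angles in radians.
    The heading difference is wrapped so that it lies between 0 and pi.
    """
    pairs = list(combinations(range(len(positions)), 2))
    if not pairs:
        raise ValueError("need at least two agents")
    total_dist = 0.0
    total_angle = 0.0
    for i, j in pairs:
        total_dist += math.dist(positions[i], positions[j])
        diff = headings[i] - headings[j]
        # atan2(sin, cos) wraps the difference into [-pi, pi].
        total_angle += abs(math.atan2(math.sin(diff), math.cos(diff)))
    return total_dist / len(pairs), total_angle / len(pairs)
```

A tightly grouped, uniform flock gives low values on both measures; a scattered, disordered one gives high values.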

Optical Flow

I did a postdoc in Japan, where I collaborated with Shin'ya Nishida from Kyoto University to investigate how optical flow influences perceived motion constancy across different optical material properties.

Flow Complexity

Motion constancy across varying optical properties is a difficult computational challenge under real-world conditions. Retinal optical flow changes significantly depending on the interactions between the optical material properties, object shape, and surrounding light field of moving objects. Specular and diffuse reflections, along with refractions at object surfaces, can create complex optical flow patterns that do not correspond directly to object motion.

The Research

Our results suggest that we do exhibit some constancy, but we can only partially compensate for differences in optical flow caused by varying material properties. This can lead to perceptual illusions (see video below), where motion appears different between objects with different materials or lighting, despite being physically identical. In a separate study, we investigated how these cues influence our ability to distinguish between rigid and non-rigid objects.

Liquids

During my PhD in the Experimental Psychology department at Justus-Liebig-Universität Gießen, I studied the visual perception of deformable materials. Supervised by Roland Fleming, I focused on how our visual system estimates the viscosity of liquids. I continued this work with Shin'ya Nishida at NTT Communication Science Laboratories in Japan, where I researched how neural networks inspired by our visual system estimate liquid properties.

The Studies

Fluids and other deformable materials have highly variable shapes, influenced by intrinsic properties (e.g., viscosity, velocity) and extrinsic forces (e.g., gravity, object interactions). How do we achieve viscosity constancy despite large variations in the retinal image? In this research, I identified the image cues used to estimate viscosity. We found that mid-level features (e.g., ripples, clumping, spread, piling up) are crucial for viscosity constancy across diverse contexts. These features are difficult to quantify using image statistics or 3D measurements, as they vary in orientation, scale, and local appearance. In a follow-up study, we used deep learning models to gain insights into higher-order, context-invariant image-based features.

The Stimuli

During my PhD, I spent significant time setting up a technical pipeline to generate stable, precise, and realistic liquid stimuli. I completed a three-month secondment in Madrid at Next Limit, where I learnt to use their particle simulation software RealFlow, developed for the VFX industry. The stimuli were rendered using Maxwell Render. The computational cost was high, so I wrote custom scripts to distribute calculations across multiple systems and clusters.

To train the neural networks, I procedurally rendered a training set of over 2 million images, or 100,000 short videos. Multiple parameters were scripted to create a diverse set of liquid interactions at sixteen different viscosities. The training set is available on Zenodo.

Gloss

For my Master’s degree, I completed a research project on gloss perception in the Perceptual Intelligence Lab at Delft University of Technology with Sylvia Pont and Maarten Wijntjes.

The Study

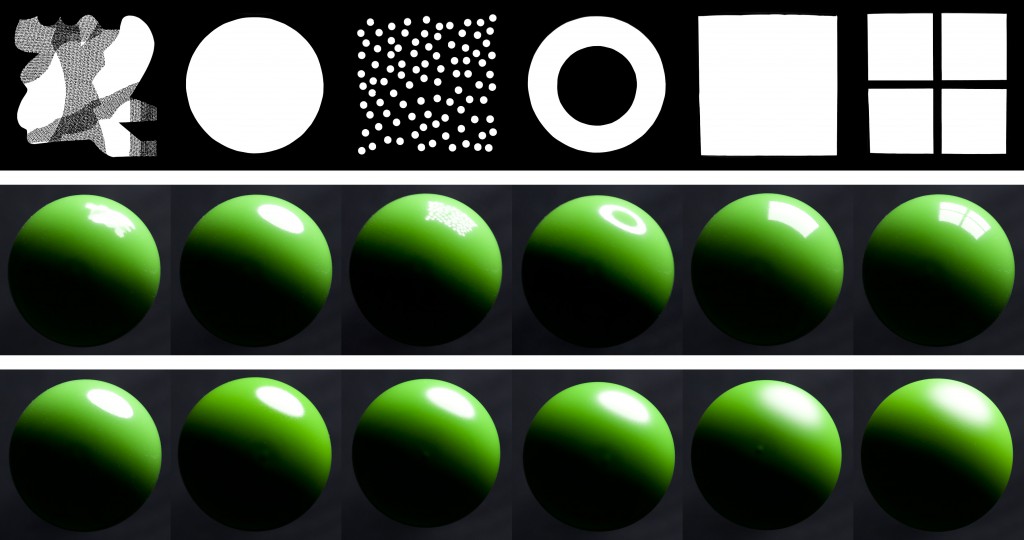

We investigated the influence of the spatial structure of illumination on gloss perception. The inspiration came from artworks such as Vermeer’s paintings, where simplified highlight shapes are used to depict real-world scenes. We found that complex highlight shapes resulted in a less glossy appearance than simple shapes like discs or squares.

The Stimuli

A diffuse light box with various shaped masks was used to produce six different highlight shapes. Inside the box, spherical stimuli were painted with six levels of glossiness, resulting in a set of 36 stimuli (6 shapes × 6 gloss levels). Observers rated glossiness based on both real stimuli and photographs displayed on a calibrated monitor.
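The full factorial design can be enumerated in a few lines. The labels below are placeholders, since the actual mask shapes and gloss levels are not named here; only the 6 × 6 structure comes from the description above.

```python
from itertools import product

# Placeholder labels: the real shape names and gloss values are not given.
shapes = [f"shape_{i}" for i in range(1, 7)]
gloss_levels = list(range(1, 7))

# Every combination of highlight shape and gloss level is one stimulus.
stimuli = list(product(shapes, gloss_levels))
print(len(stimuli))  # 6 shapes x 6 gloss levels
```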